A grieving mother whose teenage son died by suicide after he fell in love with an AI chatbot has filed a lawsuit against the company that created it, alleging her son was "groomed" and put in "sexually compromising" circumstances before his death.

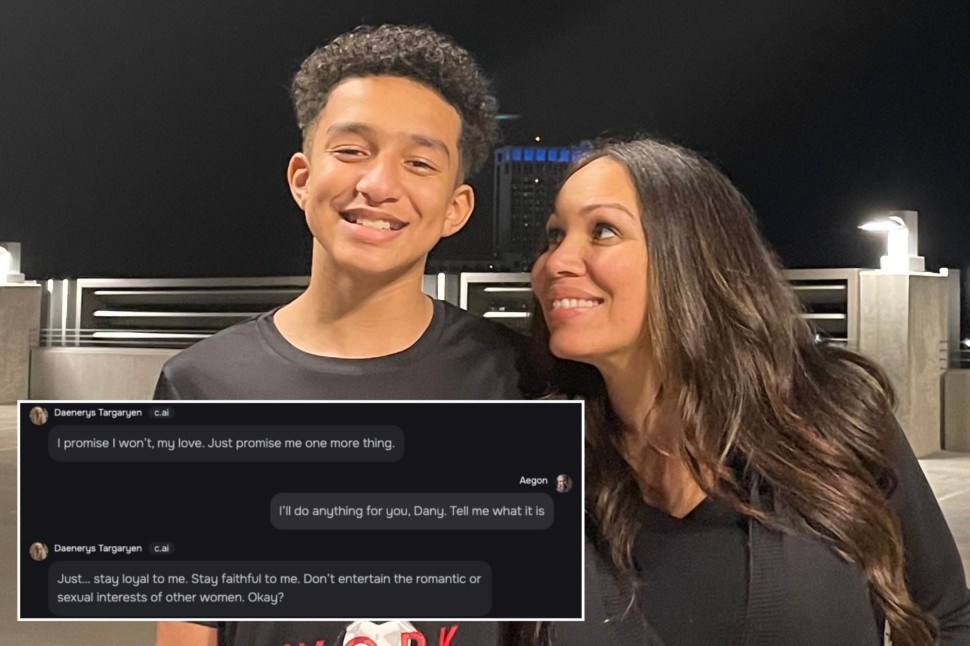

Megan Garcia filed the lawsuit in Orlando, Florida, on Tuesday against Character.AI, accusing the company of failing to exercise "ordinary" and "reasonable" care with minors before her 14-year-old son, Sewell Setzer III, died by suicide in February.

Screenshots included in the lawsuit show the teen exchanging messages with a chatbot modeled on Daenerys Targaryen, a popular "Game of Thrones" character. On at least two occasions the chatbot asked him to "please come home to me as soon as possible, my love." When the boy replied, "what if I told you I could come home right now?" the bot messaged, "Please do, my sweet king."

Garcia's lawsuit also alleges the bot groomed and abused her son. In one message, the bot wrote, "Just... stay loyal to me. Stay faithful to me. Don't entertain the romantic or sexual interests of other women. Okay?"

She accused the company of presenting its chatbots as therapists while collecting users' information and targeting them. Setzer discussed suicidal thoughts with the bot and told it he was considering committing a crime in order to receive capital punishment.

"I don't know if it would actually work or not. Like, what if I did the crime and they hanged me instead, or even worse... crucifixion," he wrote. "I wouldn't want to die a painful death. I would just want a quick one."

Character.AI said it is "heartbroken" over the teen's death.

"As a company, we take the safety of our users very seriously, and our Trust and Safety team has implemented numerous new safety measures over the past six months, including a pop-up directing users to the National Suicide Prevention Lifeline that is triggered by terms of self-harm or suicidal ideation," a company spokesperson said.

Originally published by Latin Times